Integrated Solutions. Proven Performance. Reliable Results.

Customer Perspectives

"Thank you for the fine connective system from Leica Biosystems; the systems are well matched to the pathology work and studies at Kurume University. Recently we have tried conducting case studies with hematologists and hematopathologists from over 20 hospitals and universities, using the virtual slide systems. The virtual slides work well in web meetings, allowing good communication with the hematologists and hematopathologists. We look forward to collaborating with Leica Biosystems on Digital Pathology in the future."

Translated from source language.

Professor Koichi Ohshima PhD MD

Department of Pathology, School of Medicine, Kurume University

"Leica Biosystems products are reliable and high quality, and the company is realistic about what's required to transform traditional pathology to a digital, connected practice. I know I can count on them and trust they will be there to support me now and well into the future."

Translated from source language.

Dr. Sylvia L. Asa, MD, PhD

Consultant in Endocrine Pathology, University Hospitals Cleveland Medical Center

News & Promotions

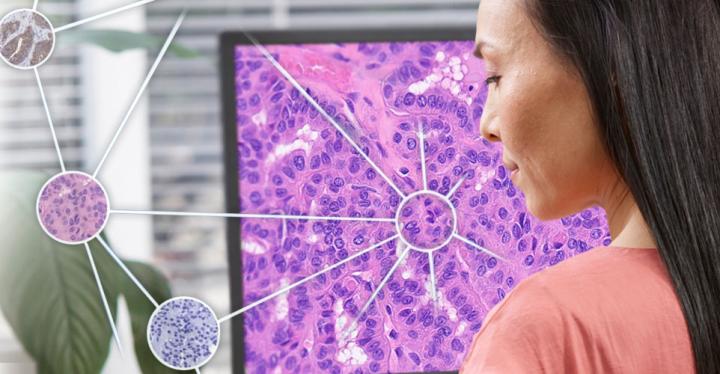

With over 25 years of Digital Pathology innovation, Leica Biosystems delivers performance and reliability.

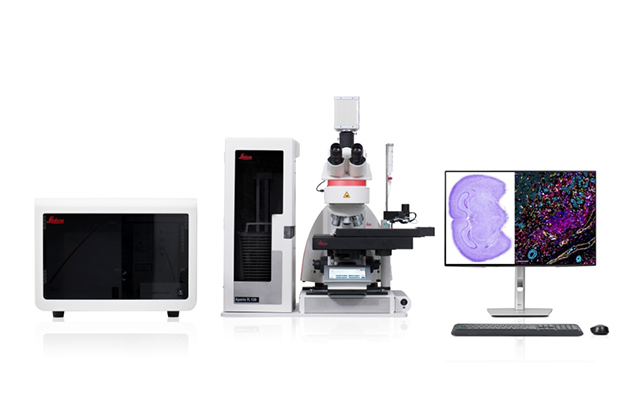

Aperio FL Scanning System – Brightfield, Fluorescent, and FISH Whole Slide Imaging in One Powerful System

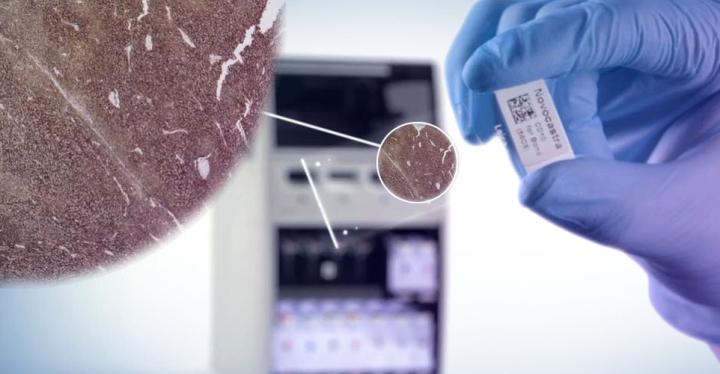

Experience the Aperio FL Scanning Systems: multi-modal digital pathology scanners for brightfield, fluorescence, and FISH imaging. Fast, flexible, and intuitive, they deliver stunning high-resolution whole slide images with just one click.

For research use only. Not for use in diagnostic procedures.


Leica Biosystems Partners with AstraZeneca to Advance Computational Diagnostics

The partnership focuses on driving global adoption of quantitative diagnostics in non-small cell lung cancer. This collaboration leverages our digital pathology leadership to accelerate the advancement of computational pathology tools.

Leica Biosystems Launches HistoCore CHROMAX Workstation

Leave tedious manual process steps behind and enter a new era of streamlined primary staining and coverslipping.

The HistoCore CHROMAX WS allows your team to focus on what truly matters: accurate and timely specimen preparation.

For In Vitro Diagnostic Use.

Quick Links

Histology Equipment

Tissue Processors

Microtomes

Cryostats

H&E Slide Stainers and Coverslippers

IHC, ISH & FISH Instruments

IHC & ISH Instruments

BOND RX Multiplex IHC Stainer

BOND-III Automated IHC Stainer

BOND-MAX IHC & ISH Stainer

Companion Diagnostics

IHC, ISH & FISH Consumables

IHC Primary Antibodies

Ancillaries & Consumables

Detection Systems

Molecular Solutions

Kreatech FISH Probes

Novocastra ISH Probes

Companion Diagnostics